Even if Calvin finds the correct, feasible tour traversing the 23+ 'cities', it is never going to be Hobbes-optimal.

(pic source: twitter)

Saturday, November 29, 2014

Thursday, November 20, 2014

The Contextual Optimization of Sanskrit

I'd written a blog for the INFORMS conference earlier this month, from a practitioner's perspective, that emphasized the importance of contextual optimization rather than despairing over the theoretical worst-case behavior of certain mathematical problems. This is something well internalized by the large-scale and non-convex optimization community, where 'NP-Hardness' is often the starting point, rather than the end point, of R&D.

The wonderful 'Philip McCord Morse Lecture' by Prof. Dimitris Bertsimas of MIT at the recently concluded INFORMS conference in San Francisco touched upon this point, and the 'conclusions' slide of the talk explained the idea really well. To paraphrase: 'tractability of a problem class is tied to the context: whether the instances you actually encounter can be solved well enough'. I mentioned the Sulba Sutras in that blog, a well-known body of work that epitomizes the Indian approach to mathematics as Ganitha, the science of computation. The genius Srinivasa Ramanujan is a relatively recent and famous example of a mathematician hailing from this tradition. The Indian approach is often algorithmic, and more about rule generation than infallible theorem proving. Not that Indians shied away from proof ('Pramaana'; see, for example, the 'Yuktibhasa'). As I understand it, this sequential process of discovery and refinement does not lose sleep over theoretical fallibility, and consists of:

a) in-depth empirical observation of, and deep meditation on, facts,

b) insightful rule generation,

c) iterative, data-driven refinement of rules.

This quintessential Indian approach is applied not just to math, but to practically every field of human activity, including economics, commerce, art, medicine, law, ethics, and the diverse dharmic religions of India, including Hinduism and Buddhism. Panini's Sanskrit is a great example of this approach.

Panini, the famous Sanskrit grammarian (along with Patanjali), is perhaps the most influential human that much of the world knows little about. His fundamental contributions to linguistics more than 2000 years ago continue to transform the world in many ways even today. Noted Indian commentator Rajeev Srinivasan has recently penned a wonderful article on Panini and Sanskrit. You can learn more about Panini's works by reading Dr. Subhash Kak's (Oklahoma State Univ.) research papers (samples are here and here). This blog was, in part, triggered by that article, and discusses Sanskrit and its contextual optimizations.

Abstract: Sanskrit recognizes the importance of context. The two examples below show how Sanskrit is optimized, depending on the context, in two entirely opposite directions.

Optimization-1. As Rajeev Srinivasan's article explains, the grammar is designed to be entirely context-free, and it anticipated the 'grammar' of today's high-level computing languages by more than 2000 years: precise, with zero room for ambiguity of nominal meaning. To the best of my knowledge, punctuation marks are not required, and the order of the words can be switched without the sentence breaking down: it remains unambiguously correct, although there may be personal preferences for some orders over others. An optimization goal here, therefore, is to generate a minimum (necessary and sufficient) number of rules that result in maximally error-free production and management of the maximum possible number of variations of Sanskrit text. In this case, Panini appears to have achieved the ultimate goal of generating a minimal set of rules that will produce error-free text, forever. There are other well-known optimizations hidden in the structure and order of the Sanskrit alphabet; more on that later.
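To make the word-order point concrete, here is a minimal Python sketch. It assumes a drastically simplified, hypothetical suffix table (only the nominative and accusative singular of a-stem masculine nouns, plus a third-person-singular present verb ending) and is nowhere near Panini's actual rule system. Because each word's role is read off its ending rather than its position, every permutation of 'rāmaḥ grāmam gacchati' ("Rama goes to the village") receives the same parse.

```python
from itertools import permutations

# Hypothetical toy table: (word ending, grammatical role). Real Sanskrit
# morphology is far richer; this covers only the three words used below.
ENDING_ROLES = (
    ("ati", "action"),  # 3rd person singular present verb, e.g. gacchati
    ("aḥ", "agent"),    # nominative singular of an a-stem masculine noun
    ("am", "goal"),     # accusative singular of an a-stem masculine noun
)


def assign_roles(sentence: str) -> dict[str, str]:
    """Assign each word a role from its ending alone, ignoring word order."""
    roles = {}
    for word in sentence.split():
        for ending, role in ENDING_ROLES:
            if word.endswith(ending):
                roles[role] = word
                break
        else:
            raise ValueError(f"no rule in this toy table covers {word!r}")
    return roles


words = ["rāmaḥ", "grāmam", "gacchati"]
parses = [assign_roles(" ".join(order)) for order in permutations(words)]
print(all(p == parses[0] for p in parses), parses[0])
```

All six orderings yield the same role assignment, which is the property the paragraph describes; a position-based grammar such as English would not survive the same shuffling.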

Optimization-2. The final interpretations of many keywords in Sanskrit ARE contextual. This means that some words with a nominal/generic meaning have multiple, related interpretations, and you have to optimize the final interpretation at run-time by examining the context of usage to recover the most suitable specific choice. If the first optimization helped eliminate fallibility, this second optimization in a sense re-introduces a limited fallibility, and a degree of uncertainty and freedom, by design! This feature has encouraged me to reflect (recall Ganesha and Veda Vyasa), develop situational awareness while reading, pay attention to the vibrations of the words, and grasp the context of the keywords employed, rather than mechanically parse words and process sentences in isolation. A thoughtful Sanskrit reader who recognizes this subtle optimization comes away with a deeper understanding. For example, Rajiv Malhotra, in his book 'Being Different' (now in the top-10 list of Amazon's books on metaphysics), gives us the example of 'Lingam'. This can mean 'sign', 'spot', 'token', 'emblem', 'badge', etc., depending on the context. Apparently, there are at least 16 alternative usages of 'Lingam', from which the one that best suits a given context is picked, rather than one simply selected at random. And of course, the thousand contextual names ('Sahasranamam') of Vishnu are well known in India. Some well-known western and Indian 'indologists' have ended up producing erroneous, and often tragic, translations of Sanskrit text, either because they failed to recognize this second optimization, or because they misused this scope for optimization to choose a silly interpretation, leading to comic or tragic conclusions.
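The read-time choice among senses can be sketched with a simplified Lesk-style overlap heuristic, a classic word-sense disambiguation technique from NLP (not something the text attributes to Sanskrit tradition). The cue-word lists below are illustrative assumptions, not a real lexicon, and the contexts are in English for readability. Note that the function declines to guess when the context gives no signal or is tied, rather than picking at random.

```python
import re
from typing import Optional

# Hypothetical cue words for a few senses of 'lingam' named in the text.
# These lists are illustrative assumptions, not a real lexicon.
SENSE_CUES = {
    "sign": {"smoke", "fire", "evidence", "indicates", "inference"},
    "emblem": {"shrine", "worship", "symbol", "deity"},
    "badge": {"wear", "wore", "uniform", "rank"},
    "spot": {"skin", "stain", "mark"},
    "token": {"pledge", "gift", "exchange"},
}


def pick_sense(context: str) -> Optional[str]:
    """Pick the sense whose cue words overlap most with the context.

    Returns None when there is no overlap or a tie, instead of guessing.
    """
    tokens = set(re.findall(r"[a-z]+", context.lower()))
    scores = {sense: len(cues & tokens) for sense, cues in SENSE_CUES.items()}
    best = max(scores.values())
    winners = [sense for sense, score in scores.items() if score == best]
    return winners[0] if best > 0 and len(winners) == 1 else None


print(pick_sense("Smoke on the distant hill is evidence of fire."))  # sign
print(pick_sense("An emblem placed in the shrine for worship."))     # emblem
print(pick_sense("The word appears on its own."))                    # None
```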

Again, this contextual optimization approach of the ancient Indians is not restricted to Sanskrit, but is employed gainfully in many areas, including classical arts, management, healthcare, ethics, etc., and of course dharmic religion. This contextual dharmic optimization has perhaps helped India get the best out of its diverse society, as well as keep its Sanskriti refreshed and refined over time. For example, the contextual ethics of dharma (ref: Rajiv Malhotra's book) has a universal pole as well as a contextual pole, which allows a decision maker faced with a dilemma not to blindly follow some hard-wired ideological copybook, but to contemplate and wisely optimize his/her 'run-time' choice based on the context, such that himsa is minimized (dharma is maximally satisfied). Some posts in this space have tried to explore the applications of this idea.

An earlier blog discussed a related example of seemingly opposite goals for contextual optimization. When it came to mathematical algorithms, data, and linguistic rules in Sanskrit, one goal was to be brief and dense, minimize redundancy, and maximize data compression, so that, for example, an entire sine-value table, or a method for generating the first N decimals of Pi, could be both encoded and decompressed elegantly in terse verse. Panini's 'Iko Yan Aci' (Ashtadhyayi 6.1.77), which builds on the letter groupings of the Siva Sutras, is a famous example of a super-terse linguistic rule. On the other hand, when it came to preserving long-term recall and accuracy of transmission of Sanskrit word meanings, as well as the precise vibrations of mantras (e.g., Vedic chants) that are critically linked to the 'embodied knowing' tradition of India, the aim appears to be one of re-introducing controlled data redundancy to maximize recall and minimize errors. This optimization enabled Sanskrit mantras to be accurately transmitted orally over thousands of years.
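For readers curious about what those three words encode: 'ik' names the vowels i, u, ṛ, ḷ, 'yaṇ' names the semivowels y, v, r, l, and 'ac' names all vowels, so the sutra says an ik vowel is replaced by the corresponding yaṇ semivowel when a vowel follows. The Python sketch below "decompresses" that rule for word junctions only. It covers just the i and u vowels and ignores the interacting sutras (for example, two same-class vowels merging into a long vowel), so treat it as an illustration rather than a sandhi engine.

```python
from typing import Optional

# Decompressed letter groups (pratyaharas), restricted here to the i- and u-
# vowels for brevity: ik vowel -> matching yan semivowel.
IK_TO_YAN = {"i": "y", "ī": "y", "u": "v", "ū": "v"}
# ac: the vowels ('ai' and 'au' begin with 'a', so checking one letter suffices).
AC = set("aāiīuūṛṝḷeo")
# Vowels of the same class; before these, a different sutra applies instead.
SAME_CLASS = {"i": "iī", "ī": "iī", "u": "uū", "ū": "uū"}


def iko_yan_aci(first: str, second: str) -> Optional[str]:
    """Join two words by 'iko yan aci', or return None if the rule does not apply."""
    if not first or not second:
        return None
    last, nxt = first[-1], second[0]
    if last in IK_TO_YAN and nxt in AC and nxt not in SAME_CLASS[last]:
        return first[:-1] + IK_TO_YAN[last] + second
    return None  # not this rule's case; other sutras (not modeled) govern it


print(iko_yan_aci("dadhi", "atra"))  # dadhyatra
print(iko_yan_aci("madhu", "atra"))  # madhvatra
print(iko_yan_aci("iti", "iha"))     # None: same-class vowel, handled elsewhere
```

The three-word sutra carries what takes three lookup tables here, which is the compression the paragraph describes.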

To summarize, contextual optimization is a powerful and universal dharmic approach that has been employed wisely by our Rishis, Acharyas, Gurus, and thinkers over centuries to help us communicate better; be more productive, healthy, creative, empathetic, scientific, and ethical; and interact harmoniously with mutual respect.

update 11/22/2014: 'optimize the final interpretation at parse-time / read-time' is the intent for optimization-2, rather than the computer-science notion of 'interpretation at run time'.


Saturday, October 25, 2014

Lassoing the Exponential

An abbreviated version was blogged for the INFORMS 2014 annual conference as 'Not Particularly Hard'.

-------------------------------------

While working on a new retail optimization problem a few weeks earlier, a colleague was a bit disappointed that it turned out to be NP-Hard. Does that make the work unpublishable? I don't know. But unsolvable? No. The celebrated Traveling Salesman Problem (TSP) is known to be a difficult problem, yet Operations Researchers continue to solve incredibly large TSP instances to proven near-global optimality, and we routinely manage small TSP instances every time we lace our shoes. Why did I bring up laces? In a moment...

Hundreds of problems that are known to be difficult are 'solved' routinely in industrial applications. In this practical context, the theoretical worst-case result matters relatively less than whether the real-life instances that show up can be managed well enough, and invariably, the answer to that question is a resounding YES. The worst-case exponential but elegant 'Simplex method' continues to be a core algorithm in modern-day optimization software packages.
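As a small illustration of "NP-Hard but manageable", here is a Python sketch on a hypothetical 8-city instance with random coordinates. It compares a simple 2-opt local search (a standard TSP heuristic, not the exact branch-and-cut methods used to certify the large instances mentioned above) with brute-force enumeration, which is only feasible because the instance is tiny.

```python
import itertools
import math
import random

Point = tuple[float, float]


def tour_length(points: list[Point], tour: list[int]) -> float:
    """Length of the closed tour visiting points in the given order."""
    return sum(
        math.dist(points[tour[k]], points[tour[(k + 1) % len(tour)]])
        for k in range(len(tour))
    )


def brute_force(points: list[Point]) -> tuple[float, list[int]]:
    """Exact optimum by enumeration: (n-1)! tours, so only for tiny instances."""
    best_len, best_tour = math.inf, []
    for perm in itertools.permutations(range(1, len(points))):  # city 0 fixed
        tour = [0, *perm]
        length = tour_length(points, tour)
        if length < best_len:
            best_len, best_tour = length, tour
    return best_len, best_tour


def two_opt(points: list[Point], tour: list[int]) -> list[int]:
    """Local search: reverse a segment whenever doing so shortens the tour."""
    tour = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                if tour_length(points, candidate) < tour_length(points, tour) - 1e-12:
                    tour, improved = candidate, True
    return tour


rng = random.Random(7)
cities = [(rng.random(), rng.random()) for _ in range(8)]
opt_len, _ = brute_force(cities)
heur = two_opt(cities, list(range(len(cities))))
print(f"2-opt: {tour_length(cities, heur):.4f}  exact: {opt_len:.4f}")
```

Enumeration cost grows factorially with the number of cities, while each 2-opt pass is polynomial; on small instances like this one, the heuristic often lands at or near the optimum.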

This issue of contextual optimization is not a new one. For some ancient people who first came across 'irrational' numbers, it was apparently a moment of uneasiness: how to 'exactly' measure quantities that were seemingly beyond rational thought? For others, it was not much of an issue. Indeed, there is an entire body of Ganitha (the science of calculation, or mathematics) work in Sanskrit, the 'Sulba Sutras', almost 3000 years old, where irrational numbers show up without much ado. 'Sulba' means rope, lace, or cord. If we want to calculate the circumference of a circle of radius r, we can simply use 2πr along with an approximation of π that is optimally accurate, i.e., good enough in the context of our application. If we did not have a good enough value for π, we could literally get around the problem: simply draw a circle of radius r, and line up a Sulba along its circumference to get our answer. For really large circles, we can use a scaled model instead of ordering many miles of Sulba. Not particularly hard.

Encountering a really difficult optimization problem can be a positive thing, depending on how we respond to it. Often, there are alternative approaches to business problems that, at first glance, appear to have insufficient data, which tempts us to throw in the towel, send the problem back to the customer, and say "infeasible" or "unbounded". Instead, we can use a Sulba and lasso our decision variables. This could well be an ORMS motto:

"When the going gets NP-Hard, Operations Researchers get going" :)

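Two small Python illustrations of "good enough in context", both sketches: the rational approximation of √2 commonly attributed to the Baudhayana Sulba Sutra, 1 + 1/3 + 1/(3·4) − 1/(3·4·34) = 577/408, and a helper that picks the coarsest π approximation meeting a required tolerance on the circumference 2πr. The menu of π values and the tolerances are assumptions for illustration, and math.pi serves as the reference value.

```python
import math
from fractions import Fraction

# Sulba Sutra rational approximation of sqrt(2): 1 + 1/3 + 1/(3*4) - 1/(3*4*34)
SQRT2_SULBA = 1 + Fraction(1, 3) + Fraction(1, 3 * 4) - Fraction(1, 3 * 4 * 34)

# Hypothetical menu of pi approximations, ordered from coarse to fine.
PI_MENU = [("3", 3.0), ("22/7", 22 / 7), ("355/113", 355 / 113), ("math.pi", math.pi)]


def good_enough_pi(radius: float, tolerance: float) -> str:
    """Coarsest pi approximation whose circumference 2*pi*r is within tolerance."""
    reference = 2 * math.pi * radius
    for label, value in PI_MENU:
        if abs(2 * value * radius - reference) <= tolerance:
            return label
    return "math.pi"  # reached only for a negative tolerance


print(SQRT2_SULBA, float(SQRT2_SULBA), math.sqrt(2))
print(good_enough_pi(1.0, 0.5), good_enough_pi(1.0, 0.01), good_enough_pi(1.0, 1e-5))
# 3 22/7 355/113
```

The √2 value is off by roughly two parts in a million, and the right π depends entirely on the tolerance the application demands.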
Saturday, September 6, 2014

Jugaad for Moto-E Video chat

I recently got a family member in India a really cheap but very useful smartphone, the Motorola Moto-E.

A problem is that this phone has only a rear-facing camera. Moto-E holders have to turn the phone around for us to see them during a video chat, which deprives them of a view of the caller. Of course, if both callers are using Moto-Es, video chats get even more frustrating. A simple Jugaad to fix this is to have the Moto-E holders sit in front of a dressing mirror during the video chat. I'm sure somebody figured this out long ago, but I got a small kick out of it.


Saturday, August 30, 2014

The Best Decisions are Optimally Delayed

The lessons learned from the last few years of practice have convinced me that analytics and OR (Operations Research), or at least MyOR, is mainly about learning the art and science of engineering an optimally delayed response. Good analytics produces an optimally delayed response.

But why introduce a delay in the first place? Isn't faster always better? The 'science of better' does not always mean the 'science of faster'. From age-old proverbs we find that between 'haste makes waste' (the knee-jerk reaction) and being 'too clever by half' lies 'look before you leap'. If we view Einstein's "Make things as simple as possible, but not simpler" from an OR perspective, the reason becomes clearer. We must make situation-aware and contextually optimal decisions as fast as possible, or as slow as necessary, but not faster or slower, i.e., there exists a nonzero optimal delay for every response decision: a middle path between a quick-and-shallow suboptimal answer and a slow-and-unwieldy 'over-optimized' recipe. Of course, one must work hard during this delay to maximize the impact of the response, and put Parkinson's law to good use, as suggested below:

#orms methods often *delay* product response to find superior answers. Tricky to pick optimal (delay, improvement) pair :)

- (@dualnoise) August 1, 2014

See this old post on 'optimally delaying an apology' to maximize benefit to the recipient, or recall the best players of every sport being able to delay their response by those few milliseconds to produce moments of magic, or Gen. Eisenhower delaying the call to launch D-Day. In the same way, a good OR/analytics practitioner will instinctively seek an optimal delay. For an example of this idea within an industrial setting, read this excellent article by IBM Distinguished Engineer J. F. Puget on taxicab dispatching that he shared in response to the above tweet. Implication: If your analytics system is responding faster than necessary, then slow it down a bit to identify smarter decision options. The 'slower' version of this statement is more obvious and is a widely used elevator pitch to sell high-performance analytics and optimization tools.

The history of the 'optimal delay' is many thousand years old, going back to the writing of the world's longest dharmic poem, the Mahabharata, which also includes within it, the Bhagavad Gita, one of the many sacred texts of Hinduism.

(pic link: http://www.indianetzone.com)

The story about how this epic came to be written is as follows:

Krishna Dvaipayana (Veda Vyasa) wanted a fast but error-free encoding of the epic that could be told and retold to generations thereafter. The only feasible candidate for this task was the elephant-faced and much-beloved Ganesha, the Indian god of wisdom and knowledge, and remover of obstacles. The clever Ganesha agreed to be the amanuensis for this massive project on the condition that he must never be delayed by the narrator, and must be able to complete the entire epic in ceaseless flow. Veda Vyasa accepted, but had his own counter-condition: Ganesha should first grasp the meaning of what he was writing down. This resulted in a brilliant equilibrium.

Veda Vyasa composed the Mahabharata in beautiful, often dense verse that Ganesha had to decipher and comprehend even as he wrote it down, without lifting from the palm leaves the piece of his tusk that he had broken off to inscribe with. If Ganesha was too slow, it would potentially give Vyasa the opportunity to increase the density and frequency of incoming verses, which might overload even his divine cognitive rate. If he went too fast, he would risk violating Vyasa's constraint. Similarly, if Vyasa was too slow, he would violate Ganesha's constraint. If he went too fast, his verse would lose density and risk becoming error-prone, and of course, Ganesha would then not have to think much and could write it down even faster. Imagine, if you will, a Poisson arrival of verses from Vyasa divinely balanced by the exponentially distributed comprehension times of Ganesha. Writer and composer optimally delayed each other to produce the greatest integral epic filled with wisdom ever known, written in spell-binding Sanskrit verse without error, and flowing ceaselessly to this day.
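One way to read the "Poisson arrivals, exponential comprehension times" image is as an M/M/1 queue: verses arrive at rate λ, Ganesha clears them at rate μ, the system is stable only if λ/μ < 1, and the mean time a verse spends in the system is 1/(μ − λ), which grows without bound as λ approaches μ. The Python sketch below simulates that queue with the Lindley recursion; the rates are hypothetical, and this is an illustration of the analogy, not a model from the story itself.

```python
import random


def mean_time_in_system(arrival_rate: float, service_rate: float,
                        n_verses: int, seed: int = 0) -> float:
    """Simulate an M/M/1 queue with the Lindley recursion.

    Verses arrive as a Poisson process (exponential inter-arrival times) and each
    takes an exponentially distributed time to comprehend and write down.
    Returns the average time a verse spends waiting plus being written.
    """
    rng = random.Random(seed)
    wait = 0.0   # time the current verse waits before Ganesha starts on it
    total = 0.0
    for _ in range(n_verses):
        service = rng.expovariate(service_rate)
        total += wait + service
        gap = rng.expovariate(arrival_rate)    # time until the next verse arrives
        wait = max(0.0, wait + service - gap)  # Lindley recursion
    return total / n_verses


lam, mu = 0.5, 1.0  # hypothetical rates, in verses per unit time
simulated = mean_time_in_system(lam, mu, 200_000)
print(f"simulated {simulated:.3f} vs formula 1/(mu - lam) = {1 / (mu - lam):.3f}")
```

Raising lam toward mu in this sketch shows the delay exploding, the queueing counterpart of Vyasa overloading Ganesha.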

I can think of no better way to celebrate Ganesha Chathurthi than to recall and apply this lesson in everyday life.

Saturday, July 5, 2014

Brief look at Soccer versus Basketball Foul Models

In basketball, fouls are allowed up to a limit; breaching it results in automatic penalties that turn into potential baskets and/or players getting fouled out. Therefore, hoops foul rules represent a capacitated model in which each foul comes with a marginal cost (dual value): players pay a shadow price per foul and have to manage this dual cost smartly as the game reaches its climax. In soccer, however, the foul model is uncapacitated, with little penalty unless it is a hard foul that invites a yellow or red card. In fact, soccer appears to provide a net incentive to commit cynical and tactical fouls. Consequently, you often end up with foul-a-minute matches like the Brazil-Colombia World Cup clash, where the game stops every few minutes and the momentum dies.
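A tiny hypothetical model makes the shadow-price language concrete: a player has several chances to foul, each with an assumed benefit, and the rules cap the number of fouls. The finite-difference value of relaxing the cap by one foul is the marginal cost the paragraph refers to; once the cap no longer binds (the uncapacitated, soccer-like case), that value drops to zero. The benefit numbers are invented for illustration.

```python
def best_foul_value(benefits: list[float], foul_limit: int) -> float:
    """Best total benefit if at most `foul_limit` foul opportunities are taken.

    With one foul per opportunity (unit weights), taking the largest positive
    benefits first is optimal.
    """
    gains = sorted((b for b in benefits if b > 0), reverse=True)
    return sum(gains[:foul_limit])


def shadow_price(benefits: list[float], foul_limit: int) -> float:
    """Finite-difference value of being allowed one more foul."""
    return best_foul_value(benefits, foul_limit + 1) - best_foul_value(benefits, foul_limit)


chances = [5, 3, 2, 1]  # hypothetical benefit of fouling at each opportunity
print(best_foul_value(chances, 2), shadow_price(chances, 2), shadow_price(chances, 4))
# 8 2 0
```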

It's just not cricket

Soccer, like cricket, leaves part of how ethically the game is played to the players. Cricket does this even more, and I personally love this decentralized approach that requires every individual to take responsibility for their actions to protect the integrity of their sport and their character (since it represents a dharma-karma-like way of dealing with ethics). But of late, we see in both sports that this 'spirit of the game' has been sacrificed precisely when the stakes are highest. Therefore, unless the teams can reform themselves, some centralized penalty approach of the kind the American way of life prefers may have to be brought in to restore balance. I personally prefer a capacitated foul model in soccer.


Wednesday, May 21, 2014

Predicting the Indian Elections - A Win for Data Science

The exit polls for the recently concluded Indian elections threw up a spectrum of results. Several cable-TV networks ran their own polls, most of their numbers falling within a seemingly reasonable range, barring a public research group called 'Today's Chanakya' (TC), whose numbers were literally off the charts, predicting a massive win for Narendra Modi. People began to average these polls to come up with an 'expected result', and many of these 'polls of polls' excluded TC's result as an outlier, discarding it as unbelievable.

I spent quite a bit of time looking at the meager information provided on the todayschanakya.com (TC) website before the results were announced. Buzz-words aside, what caught my attention was the meticulous care they took to obtain a representative data sample in every single constituency. Their prior track record in predicting elections in India was simply stunning. In a recent state election too, their prediction was an outlier, and it turned out to be accurate. This data sampling step is important, especially given the incredibly diverse nature of India's population. Translating projected vote shares into actual seats won in India's 'first past the post' system is an incredibly daunting problem. If your sample is even slightly messed up, your seat predictions can be way off, regardless of the sophistication of the predictive analytics you employ. Human judgment and domain expertise are critical.
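Why small vote-share errors become large seat errors under first-past-the-post can be sketched with a hypothetical two-party contest in ten constituencies and a uniform-swing assumption (a common simplification, and an assumption here, not TC's method). Each point of vote share moving from party B to party A widens A's margin by two points in every seat.

```python
def seats_won(margins: list[float], swing: float) -> int:
    """Seats where party A leads after a uniform swing.

    margins: A's vote share minus B's vote share, in percentage points, per seat.
    swing: points of vote share moving from B to A in every seat (uniform-swing
    assumption), so each margin grows by 2 * swing.
    """
    return sum(1 for m in margins if m + 2 * swing > 0)


# Hypothetical two-party margins for ten constituencies.
margins = [-6.0, -3.0, -1.5, -0.5, 0.5, 1.0, 2.5, 4.0, 8.0, 12.0]
for swing in (-1.0, 0.0, 1.0):
    print(swing, seats_won(margins, swing))
```

In this made-up example, a one-point swing either way moves party A between 4 and 8 of the 10 seats, so a sample that is only slightly off in vote share can badly misstate the seat count.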

As this useful blog points out, it's not about 'sampling error', but sampling bias. And once we see this, it is not difficult to see why the English TV networks of India, virtually every single one a willing and well-compensated participant in the witch hunt of Narendra Modi since 2002, miserably fail in their predictions, time and again. Their reporting has rarely been fact-driven, and is usually ratings-driven. Few, if any on their payroll, are trained in the rigorous scientific method. Reporters appear to be hired based on ideology, west-accented English-speaking ability, and political connections rather than merit or technical proficiency. So, when by force of habit, you look for a sample that you like, then you will only get the predictions you want viewers to see in your TV shows, which has little to do with reality. The media witch hunt against Modi, like their exit polls, as is now known, was never fact-driven from day one. It was doomed from the start. After this election, few will take their "predictions" seriously again unless they reform.
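The distinction between sampling error and sampling bias shows up in a few lines of simulation: random sampling error shrinks as the sample grows, while a biased sampling frame converges confidently to the wrong answer. In this Python sketch the population, the 40% support level, and the 2x over-sampling factor are all hypothetical.

```python
import random


def estimate_support(population: list[int], sample_size: int,
                     rng: random.Random, bias: float = 1.0) -> float:
    """Estimate the share of supporters (coded 1) from a sample.

    bias > 1 makes non-supporters (coded 0) that many times more likely to be
    sampled, a stand-in for a sampling frame that over-reaches one group.
    """
    weights = [bias if voter == 0 else 1.0 for voter in population]
    sample = rng.choices(population, weights=weights, k=sample_size)
    return sum(sample) / sample_size


population = [1] * 40_000 + [0] * 60_000  # hypothetical: true support is 40%
rng = random.Random(1)
for n in (100, 1_000, 10_000, 50_000):
    fair = estimate_support(population, n, rng)
    skewed = estimate_support(population, n, rng, bias=2.0)
    print(f"n={n:>6}: random sample {fair:.3f}, biased sample {skewed:.3f}")
```

With bias=2.0 the biased estimate settles near 0.4 / (0.4 + 2 × 0.6) = 0.25 however large the sample, while the random sample closes in on 0.40.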

TC's predictions were quite accurate. Modi indeed won in a landslide as they predicted, with the incumbent Nehru dynasty (aka "UPA" coalition), whose corruption almost surely qualifies as a crime against humanity, getting deservedly annihilated. On election day, at around 1-2:00 AM EST, while I was following the election trends, UPA was leading in about a hundred of the 543 seats up for grabs, way higher than the range of 61-79 seats that TC predicted they would get. However, as the day progressed, it was amazing to see UPA's leads petering out one by one, as if an invisible rope was magically pulling them back into the predicted range. Statistical destiny. Only two parties appeared to be convinced about the result before May 16: TC, who adopted a scientific approach to gathering and analyzing data, and Narendra Modi, who made the history in the first place. Both of them dared to be different, put their reputations on the line, and were worthy winners.

This election result and Modi becoming the Prime Minister of India has taught many of us a scientific lesson. Data science is about being guided by facts, not emotion, or prejudiced opinion, or preferred outcome. Carefully constructed fact-driven methods are less likely to fail. Gujarat's development, both rural and urban, spearheaded by Modi for 12 years, is real, and cannot be falsified. It happened, and it is there to be seen regardless of what the New York Times tells you. I blogged in 2012 that the heavy-lifting done in Gujarat may pay rich dividends in the future. The people there lived that development and they knew, and the thousands of migrants returned from Gujarat to other states to speak about their experience there. TC's data sample accurately reflected this reality. The media-heads sitting in Delhi, London, and New York were high on ideology-meth, low on fact. Few visited the state of Gujarat to make a factual assessment. Some of the open-minded critics who did, ended up becoming Modi's strongest supporters. Not surprisingly, his fact-driven campaign won him every single parliamentary seat there. The amazing number of Indians cutting across religious, class, language, age, gender, and geographical 'barriers', who voted for Modi, too cannot be brushed aside. Facts cannot be ignored until time-travel becomes practical.

And here's another prediction, an easy one. Modi will probably become India's best, and most unifying leader since Mahatma Gandhi, if he isn't already that. If, as the Nehru dynasty says, "power is poison", India has surely found their Shiva.

I spent quite a bit of time looking at the meager information provided on the todayschanakya.com (TC) website before the results were announced. Buzzwords aside, what caught my attention was the meticulous care they took to obtain a representative data sample in every single constituency. Their prior track record in predicting Indian elections was simply stunning. In a recent state election too, their prediction was an outlier, and it turned out to be accurate. This data sampling step is important, especially given the incredibly diverse nature of India's population. Translating projected vote shares into actual seats won in India's 'first past the post' system is an incredibly daunting problem. If your sample is even slightly skewed, your seat predictions can be way off, regardless of the sophistication of the predictive analytics you employ. Human judgment and domain expertise are critical.
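To see why seat projections are so fragile, here is a toy sketch (not TC's method, which is not public). It assumes just two parties, ten hypothetical constituencies with made-up vote shares, and a uniform swing applied to every seat. Real Indian contests are multi-cornered, so this only illustrates the sensitivity.

```python
# Hypothetical two-party vote shares for party A in ten constituencies.
SHARES_A = [0.46, 0.48, 0.49, 0.495, 0.505, 0.51, 0.52, 0.55, 0.60, 0.40]


def seats_won(shares_a, swing=0.0):
    """Count seats party A wins in a two-party, first-past-the-post toy
    after adding the same swing (in share points) to every constituency."""
    return sum(1 for share in shares_a if share + swing > 0.5)


if __name__ == "__main__":
    for swing in (-0.015, 0.0, 0.015):
        print(f"swing {swing:+.3f}: party A wins {seats_won(SHARES_A, swing)} of {len(SHARES_A)} seats")
```

With these made-up shares, a swing of 1.5 percentage points either way moves party A from 5 seats to 3 or 7 of the 10, which is why a slightly skewed sample can translate into a badly wrong seat count.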

As this useful blog points out, it's not about 'sampling error', but sampling bias. And once we recognize this, it is not difficult to see why the English TV networks of India, virtually every single one a willing and well-compensated participant in the witch hunt of Narendra Modi since 2002, fail miserably in their predictions, time and again. Their reporting has rarely been fact-driven, and is usually ratings-driven. Few, if any, on their payroll are trained in the rigorous scientific method. Reporters appear to be hired based on ideology, west-accented English-speaking ability, and political connections rather than merit or technical proficiency. So when, by force of habit, you look for a sample that you like, you will only get the predictions you want viewers to see on your TV shows, which have little to do with reality. The media witch hunt against Modi, like their exit polls, as is now known, was never fact-driven from day one. It was doomed from the start. After this election, few will take their "predictions" seriously again unless they reform.
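The difference between sampling error and sampling bias can be sketched with a small simulation. Everything here is hypothetical: half the voters are 'urban' with 30% support for a party A, half are 'rural' with 50% support, so true support is 40%. A random sample converges to the truth as it grows; a sample drawn only from urban voters converges, just as confidently, to the wrong answer.

```python
import random

URBAN_SHARE = 0.5     # hypothetical fraction of voters who are urban
URBAN_SUPPORT = 0.30  # hypothetical support for party A among urban voters
RURAL_SUPPORT = 0.50  # hypothetical support for party A among rural voters
TRUE_SUPPORT = URBAN_SHARE * URBAN_SUPPORT + (1 - URBAN_SHARE) * RURAL_SUPPORT  # 0.40


def poll(n, rng, urban_only=False):
    """Estimate support for party A from n respondents.

    urban_only=True models a biased sampling frame that never reaches rural voters."""
    yes = 0
    for _ in range(n):
        urban = urban_only or rng.random() < URBAN_SHARE
        support = URBAN_SUPPORT if urban else RURAL_SUPPORT
        yes += rng.random() < support  # True counts as 1
    return yes / n


if __name__ == "__main__":
    rng = random.Random(42)
    for n in (100, 1_000, 10_000, 100_000):
        print(f"n={n:>7}  random: {poll(n, rng):.3f}  "
              f"urban-only: {poll(n, rng, urban_only=True):.3f}  truth: {TRUE_SUPPORT:.3f}")
```

Increasing n shrinks the sampling error of both polls, but the urban-only poll settles near 30% no matter how large it gets: more data does not cure a biased frame.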

TC's predictions were quite accurate. Modi indeed won in a landslide as they predicted, with the incumbent Nehru dynasty (aka the "UPA" coalition), whose corruption almost surely qualifies as a crime against humanity, getting deservedly annihilated. On election day, at around 1-2:00 AM EST, while I was following the election trends, the UPA was leading in about a hundred of the 543 seats up for grabs, well above the range of 61-79 seats that TC had predicted for them. However, as the day progressed, it was amazing to watch the UPA's leads petering out one by one, as if an invisible rope were magically pulling them back into the predicted range. Statistical destiny. Only two appeared to be convinced about the result before May 16: TC, which adopted a scientific approach to gathering and analyzing data, and Narendra Modi, who created the history in the first place. Both dared to be different, put their reputations on the line, and were worthy winners.

This election result, and Modi becoming the Prime Minister of India, has taught many of us a scientific lesson. Data science is about being guided by facts, not emotion, prejudiced opinion, or preferred outcome. Carefully constructed, fact-driven methods are less likely to fail. Gujarat's development, both rural and urban, spearheaded by Modi for 12 years, is real and cannot be refuted. It happened, and it is there to be seen regardless of what the New York Times tells you. I blogged in 2012 that the heavy lifting done in Gujarat may pay rich dividends in the future. The people there lived that development and knew it, and thousands of migrants who returned from Gujarat to other states spoke about their experience there. TC's data sample accurately reflected this reality. The media-heads sitting in Delhi, London, and New York were high on ideology-meth and low on fact. Few visited the state of Gujarat to make a factual assessment. Some of the open-minded critics who did ended up becoming Modi's strongest supporters. Not surprisingly, his fact-driven campaign won him every single parliamentary seat there. The amazing number of Indians, cutting across religious, class, language, age, gender, and geographical 'barriers', who voted for Modi cannot be brushed aside either. Facts cannot be ignored until time travel becomes practical.

And here's another prediction, an easy one. Modi will probably become India's best and most unifying leader since Mahatma Gandhi, if he isn't already. If, as the Nehru dynasty says, "power is poison", India has surely found its Shiva.

Wednesday, May 14, 2014

Indian elections 2014: Long words, short story

Can long, archaic words be used to maximize overall brevity (and levity)? Take the 2014 Indian general elections that recently concluded. Although the final results will come out on May 16 (the amazing Narendra Modi as Prime Minister), exit polls already give us this clear-enough picture:

India has understood that the phoney "Idea of India" brand of secularism is nothing but antidisestablishmentarianism in disguise, and we are witness to the historical floccinaucinihilipilification of the Nehru-dynasty by the Indian voter.

Tuesday, March 4, 2014

Traveling Salesman Problem in a Portrait?

I'd planned to pause blogging until May this year to do my small bit in helping Narendra Modi become the next Prime Minister of India in the upcoming Indian general elections, which will determine the future of my family there, as well as the destiny of 1.2 billion Indians. India has suffered from a curse of culpable silence over the last ten years, but it now seems that is changing, thanks to inspiring examples like this one that ask people to come out and 'Vote for India' (thanks to @sarkar_swati, faculty at U-Penn, for sharing this video).

------------------------------------------------------------------------------

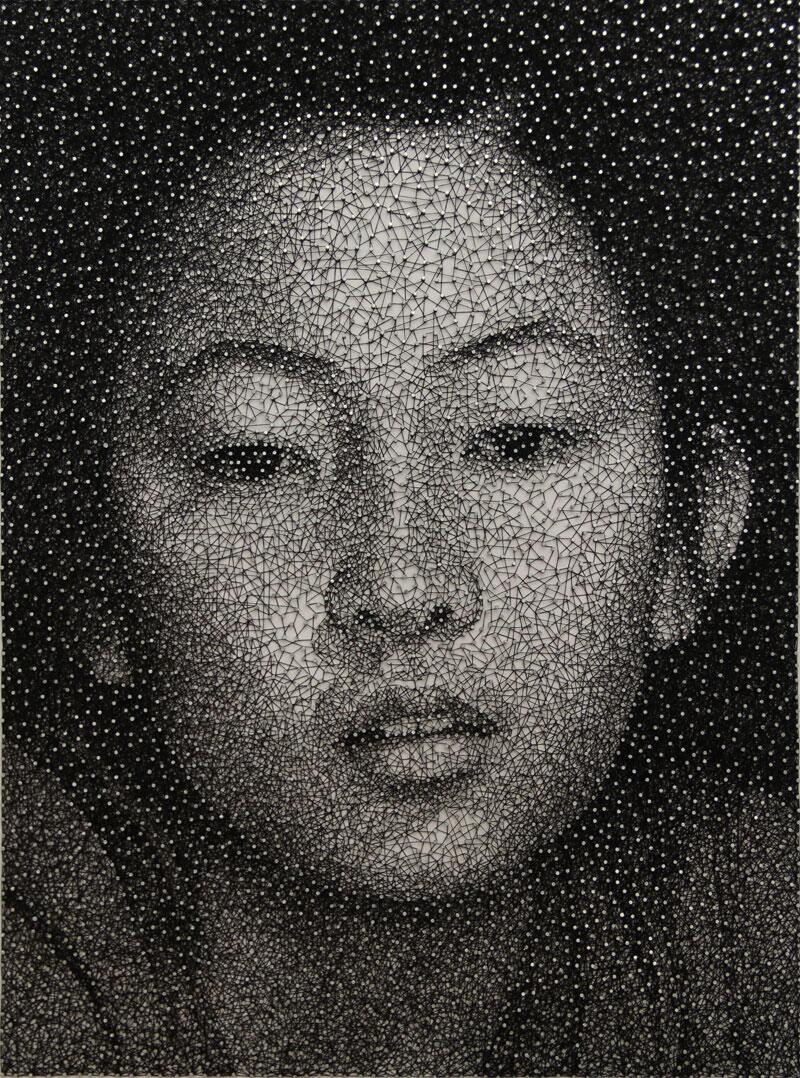

By sheer coincidence, this brief blog, like the previous one, is related to Asian (Japanese) art, and it is one I felt 'compelled' to write. Thanks to @SimoneCerbolini for sharing this beautiful portrait on Twitter. What is interesting about this art is that it was made, as Simone tweets, using "a single thread wrapped around thousands of nails. Artwork 'Mana' by Kumi Yamashita".

The artist, Kumi Yamashita, has a Facebook page, and here is another picture from there.

The effect is stunning, and one marvels at the human 'cognitive shift' that convinces us that within this collection of thread and nails there is a lady. Here is a brilliant talk by neuroscientist V. S. Ramachandran (Director, Center for Brain and Cognition, UC San Diego) on 'Aesthetic Universals and the Neurology of Hindu Art' that explains this in depth.

From the operations research perspective, we gravitate toward the mathematical problem hidden in this portrait: determining the least length of thread that passes through all these nails. A mathematical optimization model that can be used to answer this question is the celebrated 'Traveling Salesman Problem' (TSP), which is NP-hard and therefore difficult to solve in theory. In practice, however, extremely large instances have been solved to provable optimality.
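For a feel of the computation, here is a minimal heuristic sketch, not an exact solver: a nearest-neighbour tour improved by 2-opt moves, on randomly placed hypothetical 'nails'. It assumes each nail is visited exactly once (unlike the artwork, where nails are revisited) and gives no optimality guarantee; proving optimality on large instances is what specialized solvers such as Concorde do.

```python
import math
import random


def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])


def tour_length(points, tour):
    """Length of the closed tour visiting points in the order given by tour."""
    n = len(tour)
    return sum(dist(points[tour[i]], points[tour[(i + 1) % n]]) for i in range(n))


def nearest_neighbor_tour(points, start=0):
    """Greedy construction: always walk to the closest unvisited nail."""
    unvisited = set(range(len(points))) - {start}
    tour = [start]
    while unvisited:
        here = points[tour[-1]]
        nxt = min(unvisited, key=lambda j: dist(here, points[j]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


def two_opt(points, tour):
    """Reverse a segment whenever that shortens the tour; stop when no move helps."""
    tour = list(tour)
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                if i == 0 and j == n - 1:
                    continue  # edges (t[n-1], t[0]) and (t[0], t[1]) share a nail
                a, b = points[tour[i]], points[tour[i + 1]]
                c, d = points[tour[j]], points[tour[(j + 1) % n]]
                # Replacing edges a-b and c-d by a-c and b-d reverses tour[i+1..j].
                if dist(a, c) + dist(b, d) < dist(a, b) + dist(c, d) - 1e-12:
                    tour[i + 1:j + 1] = reversed(tour[i + 1:j + 1])
                    improved = True
    return tour


if __name__ == "__main__":
    rng = random.Random(7)
    nails = [(rng.random(), rng.random()) for _ in range(120)]
    greedy = nearest_neighbor_tour(nails)
    better = two_opt(nails, greedy)
    print(f"nearest neighbour: {tour_length(nails, greedy):.3f}")
    print(f"after 2-opt:       {tour_length(nails, better):.3f}")
```

Each accepted 2-opt move strictly shortens the tour, so the result is never longer than the greedy tour, and for nails in general position it ends with no self-crossings.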

Here is another page that displays a collection of pictures from the artist's 'constellation series'. It also includes this ultra close-up that lets us see how the threading really progresses at the micro-level.

Each nail is traversed multiple times, and a greater density of thread is used to create a darker shade (e.g., the eye and the brow). Additionally, there appears to be a greater density of nails in those areas. Can a thread-traversal path generated by a TSP solver (e.g., Concorde) produce a similar effect on the eye and the brain? I'm not sure, although it may still produce 'a reasonable picture of a lady'. If the density of nails is increased in these areas, then perhaps the TSP-artwork may do a good job. Alternatively, it may be possible to modify the TSP network structure to induce such an effect.
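One way to put more nails where the portrait is darker is density-weighted sampling, similar in spirit to the stippled 'TSP Art' of Kaplan and Bosch, though the simple rejection sampling below is only a stand-in for their point-placement method. This minimal sketch assumes the image is given as a grid of darkness values in [0, 1]; the resulting points could then be handed to any TSP heuristic or solver.

```python
import random


def stipple(darkness, n_candidates, rng):
    """Rejection-sample nail positions so their density follows the image's darkness.

    darkness is a grid of values in [0, 1] (0 = white, 1 = black). Each uniformly
    drawn candidate is kept with probability equal to the darkness of its cell."""
    rows, cols = len(darkness), len(darkness[0])
    nails = []
    for _ in range(n_candidates):
        x, y = rng.random() * cols, rng.random() * rows
        cell = darkness[min(int(y), rows - 1)][min(int(x), cols - 1)]
        if rng.random() < cell:
            nails.append((x, y))
    return nails


if __name__ == "__main__":
    # Hypothetical 20 x 20 "portrait": a dark left half (an eye, say) and a light right half.
    image = [[0.9] * 10 + [0.1] * 10 for _ in range(20)]
    nails = stipple(image, 4000, random.Random(0))
    dark = sum(1 for x, _ in nails if x < 10)
    print(f"{len(nails)} nails: {dark} in the dark half, {len(nails) - dark} in the light half")
```

On average about nine times as many nails land in the dark half as in the light half, matching the 0.9 : 0.1 darkness ratio.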

Saturday, January 25, 2014

Building the Unsolvable Maze

I came across a tweet via Simon Singh, the well-known author of books on mathematical topics. I've read a couple of them: 'Fermat's Last Theorem' and 'The Code Book'. His tweet points to a picture of an amazing maze hand-drawn over 30 years ago in Japan. Although it is supposed to be 'unsolvable', some comments there claim that it could be solved very quickly if it were made publicly available. Among the very first papers I read after coming to the U.S. to study traffic engineering (to understand the reasons for India's chaotic, maze-like traffic) was the one describing Moore's algorithm, entitled "The shortest path through a maze". Mathematically, the shortest path problem formulation has a couple of properties worth noting in the context of this discussion. It has no duality gap, and its constraint matrix (the node-arc incidence matrix) is totally unimodular: it is sufficient to solve the continuous 'relaxation' to recover an integral optimal solution to the primal or dual formulation. Wikipedia has a page on maze-solving algorithms. Interesting as the optimization problem of finding an 'optimal route' to 'escape' this maze is, a more interesting question to me personally was: why would someone hand-build such an intricate maze over years, and then not claim any credit for it? I have tried to interpret this based on my understanding of the Indian way.
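Moore's procedure is what is now usually called breadth-first search (BFS): label the start 0, its open neighbours 1, their unlabelled neighbours 2, and so on until the exit is reached. Here is a minimal sketch on a small hypothetical grid maze ('#' walls, 'S' start, 'E' exit), not the Japanese maze itself.

```python
from collections import deque


def find(maze, symbol):
    """Return the (row, col) of the first cell holding symbol."""
    for r, row in enumerate(maze):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in maze")


def shortest_path(maze):
    """Breadth-first search from 'S' to 'E'; returns the cells on a shortest path, or None."""
    rows, cols = len(maze), len(maze[0])
    start, goal = find(maze, "S"), find(maze, "E")
    parent = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and maze[nr][nc] != "#" and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    if goal not in parent:
        return None  # the exit is unreachable: a truly unsolvable maze
    path, node = [], goal
    while node is not None:  # walk parent links back to the start
        path.append(node)
        node = parent[node]
    return path[::-1]


MAZE = [
    "S.#.....",
    ".##.###.",
    "....#...",
    ".##...#E",
]

if __name__ == "__main__":
    path = shortest_path(MAZE)
    print(f"{len(path) - 1} moves:", path)
```

BFS visits each cell at most once, so it runs in time linear in the size of the maze. On this toy grid it finds the 12-move route along the bottom rather than the 14-move route across the top, and it returns None when the exit cannot be reached.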

(source link and main article at: http://imgur.com/gallery/4kyvVVb)

The intense concentration required for such a task is surely daunting: to at once elevate one's consciousness while also dissolving one's aham (ego) that hinders the mind from systematically growing a complex maze whose paths increase rapidly over time as more forks and merges are constructed. Paths that stop even as they begin, paths that ultimately lead nowhere, paths where you travel for a while, only to discover that you are back where you were before... and then after a lot of calm, refined, and introspective searching (not suffering), finding a path that leads one to satya (ultimate reality/truth) that transcends the maya of the maze that held us in its thrall. A path that dissolves the noisy duality between the world within the maze and without, uniting them harmoniously into a unified whole, even as the space enclosed within the maze maintains a provisional identity within this overall unity. And then perhaps a realization that there could be a pluralism of such (alternative optimal) transcendental paths to satya. A harmonious unity within multiplicity that celebrates its diversity, rather than a synthesized unity derived by optimizing the goal of orderly sameness. The latter produces an efficient monoculture, but one that invariably regards pluralism as a seed of chaos. The former, integral unity best represents the nature of the underlying philosophical unity of India that has continually preserved and refined its dharma civilization over several thousand years. This forms the dharmic basis for any reasonable 'idea of India'. I look forward to reading Rajiv Malhotra's new book on this subject.

Journeys that traverse such a path have led to amazing discoveries that enlightened the world, and will continue to do so. Perhaps such a journey produced this captivating art that simultaneously appeals to the casual observer, the artist, the seeker, and the analyst alike, yet each of us sees only a partial facet of its underlying truth. It is a work of art to which its 'creator' deliberately did not append a signature or claim ownership, perhaps unwilling to disturb its harmony. That is the way of the Yogi.

Dedicated to Rajiv Malhotra on the occasion of India's 65th Republic Day. Thank you.

Sunday, January 19, 2014

The King and the Vampire - 3: The Flaw of Optimizing-On-Average

This is the third episode of the 'King Vikram and the Vetaal' series.

(link: juneesh.files.wordpress.com)

Dark was the night and weird the atmosphere. It rained from time to time. Eerie laughter of ghosts rose above the moaning of jackals. Flashes of lightning revealed fearful faces. But King Vikram did not swerve. He climbed the ancient tree once again and brought the corpse down. With the corpse lying astride his shoulder, he began crossing the desolate cremation ground. "O wise King, it seems to me that your ministers are sometimes too quick to take policy decisions based on an average scenario, ignoring the distribution. But it is better for you to know that such decisions invariably result in complaints. Let me cite an instance. Pay attention to my narration. That might bring you some relief as you trudge along," said the Betaal, which possessed the corpse.

(pic source link: pryas.wordpress.com)

The retailing vampire went on:

Once there lived a retailer who always optimized his scarce-resource-constrained planning problems based on an average planning week. It was a quick and easy heuristic, and he claimed that it worked just fine. One day, an OR practitioner challenged this assumption, quoting points from the well-known book 'The Flaw of Averages', and said that with a bit more effort, and without increasing the problem size much, one could generate truly optimal merchandising decisions by including all scenarios, using CPLEX. This approach would also be more robust in practice than optimizing to the average. The retailer replied that while this issue was of theoretical importance, it did not matter much in practice unless he saw some hard evidence. The practitioner decided to make an empirical point and proceeded to test a number of historical instances, evaluating the recommendations generated by the two approaches over all the scenarios. In 75% of the instances, the practitioner's method yielded better metrics, while in 25% of the cases, the retailer's average method did better. The retailer took one look at the results, declared the experimental setup erroneous and the results inconclusive, and remained unconvinced.

So tell me, King Vikram, why did the retailer conclude that the test setup was faulty? Was he correct in this assessment? Which method is better in the retailer's context? Answer me if you can. Should you keep mum though you may know the answers, your head would roll off your shoulders!"

King Vikram was silent, then closed his eyes, as if going into a Yogic trance in a moment of intense meditation. He reopened his eyes soon enough along with a smile, and then spoke: "Vetaal, unlike the last time, this one is pretty easy, so let's take the first question first.

1. The retailer felt the experimental setup was flawed because, if the practitioner's method truly yielded optimal solutions as claimed, then it should have done at least as well in 100% of the instances.

2. However, the retailer was wrong, because his old method optimized against constraints based on an average scenario. There is no guarantee that its decisions will be feasible for the original problem across all scenarios. Therefore, in the instances where the old method did better, the recommendations had to be infeasible, since we cannot, of course, find a feasible solution better than an optimal one.

3. So we now know that the old method generated infeasible solutions at least 25% of the time. If we ignore these cases where we know it was surely infeasible, and look at the remaining 75% of the cases where it may have been feasible, it did worse 100% of the time due to a combination of suboptimality and overly-constrained instances.

In every case, the old method was either infeasible or suboptimal. The average-based heuristic must be discarded."

No sooner had King Vikram concluded his answer than the vampire, along with the corpse, gave him the slip.
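King Vikram's argument can be checked on a toy instance. This sketch assumes made-up numbers: two products sharing one resource whose capacity varies across three equally likely weeks, with brute-force enumeration standing in for CPLEX. The 'all-scenario' model here simply requires the plan to fit every week's capacity, which is one way of including all scenarios; the practitioner's actual model is not described in the story.

```python
from itertools import product

PROFIT = (3, 2)         # hypothetical profit per unit of products A and B
USAGE = (2, 1)          # hypothetical hours of a shared resource used per unit
CAPACITY = (8, 10, 12)  # hypothetical capacity in three equally likely planning weeks
MAX_UNITS = 10          # hypothetical upper bound on units of each product


def usage(plan):
    return sum(u * x for u, x in zip(USAGE, plan))


def profit(plan):
    return sum(p * x for p, x in zip(PROFIT, plan))


def best_plan(capacities):
    """Enumerate every integer plan; keep the most profitable one that fits every listed capacity."""
    best = None
    for plan in product(range(MAX_UNITS + 1), repeat=len(PROFIT)):
        if all(usage(plan) <= cap for cap in capacities):
            if best is None or profit(plan) > profit(best):
                best = plan
    return best


def classify(optimal_value, heuristic_value, tol=1e-9):
    """The King's inference for a maximization problem whose optimum is feasible in every scenario."""
    if heuristic_value > optimal_value + tol:
        return "infeasible"  # no feasible plan can beat a true optimum
    if heuristic_value < optimal_value - tol:
        return "worse (suboptimal or infeasible)"
    return "tied"


if __name__ == "__main__":
    average_plan = best_plan([sum(CAPACITY) / len(CAPACITY)])  # optimize the average week only
    scenario_plan = best_plan(CAPACITY)                         # must fit every week
    print("average-week plan:", average_plan, "profit", profit(average_plan))
    print("all-scenario plan:", scenario_plan, "profit", profit(scenario_plan))
    print("average plan feasible per week:", [usage(average_plan) <= cap for cap in CAPACITY])
    print("verdict:", classify(profit(scenario_plan), profit(average_plan)))
```

The average-week plan reports the higher profit (20 versus 16) but needs 10 hours in a week that has only 8, which is exactly the case the King called infeasible.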
