The introductory discussion on prioritized business rules can be read here. This sequel treats Asimov's sci-fi laws of robotics as a set of prioritized business rules within a decision support system (DSS). We try to learn some 'field lessons' from stories that revolve around violations of one or more of these laws. The three (original) laws postulated in the 1940s and the stories cited in the Wikipedia page are used in this post:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

(Trivia: Robot Chitti in the Indian movie Endhiran is obviously not Asenion. A portion of the plot in the movie bears some resemblance to a story-line in 'Robots of Dawn')

Inadequacy of rules for complex systems

Anticipating 'bugs' and identifying and resolving potential conflicts within and between the interacting components of a complex, synthesized system can be quite challenging, particularly for low-probability, high-consequence (LPHC) events. Recent examples include Boeing 787 Dreamliners, offshore oil rigs, and the western financial system. The positronic robot is no exception. Roger Clarke notes: "Asimov's Laws of Robotics have been a very successful literary device. Perhaps ironically, or perhaps because it was artistically appropriate, the sum of Asimov's stories disprove the contention that he began with: It is not possible to reliably constrain the behavior of robots by devising and applying a set of rules."

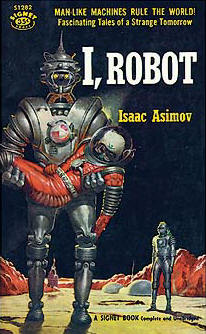

(picture link source: Wikipedia)

Hard Constraint Violation

Stories around initial designs of Asenion robots appear to revolve around hard-constraint satisfaction. In one of them, the humans forget that a telepathic robot also protects humans from mental harm. It often prefers to tell the questioner what she/he wants to hear, violating the second law by not responding with facts, in order to satisfy the higher-priority constraint of not injuring human minds 'by dashing hopes'. In the end, it runs into an irreconcilable constraint violation when it can neither speak nor be silent without hurting somebody mentally. It 'dies', taking its telepathic secrets with it.

An expensive decision support system that provides a 'null' response (or '42') after running for a long time can be irritating. There once was a user who loved a DSS when it worked, but would question slow failures, asking why the application was designed to go through bugs and not around them.
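The dead end described above can be sketched as a tiny feasibility check. This is only an illustration under stated assumptions: the action names, the `HARM` table, and the `choose` helper are hypothetical and do not come from the story or from any particular DSS.

```python
from typing import Callable, Dict, List, Optional, Set

# Hypothetical encoding: each action maps to the set of people it would harm.
HARM: Dict[str, Set[str]] = {
    "speak": {"person_a"},        # the answer hurts one listener
    "stay_silent": {"person_b"},  # withholding the answer hurts another
}


def first_law(action: str) -> bool:
    """Hard constraint: the action must harm no one."""
    return not HARM[action]


def choose(actions: List[str],
           hard_rules: List[Callable[[str], bool]]) -> Optional[str]:
    """Return the first action that satisfies every hard rule, else None."""
    for action in actions:
        if all(rule(action) for rule in hard_rules):
            return action
    return None  # infeasible: the DSS equivalent of a 'null' response


decision = choose(list(HARM), [first_law])
print(decision)  # None, because every available action violates the hard rule
```

With only hard constraints, the system has no way to rank the infeasible options against each other, so it can report nothing more useful than "no answer".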

Soft Constraint Violation

A more sophisticated robotic design in another story employs an optimization model. The laws are encoded as soft constraints using potential functions that take into account the level of importance of the order given, the priority of the law, and the degree of constraint 'violation'. Consequently, a 'truant' robot that is faced with a conflict between the second and third laws determines an optimal (equilibrium) solution to the corresponding weighted cumulative violation minimization problem. That solution causes it to continually run around in a circle centered at the origin of the conflict, which prevents the robot from completing a routine task it was assigned. Unfortunately, the robot is uninformed that the delay in the completion of this task is increasingly endangering human lives on the planet. The engineers' initial idea of breaking this cycle by perturbing the potentials in the objective function merely shifts the optimum and alters the radius of the robot's circle, but doesn't help them one bit. Finally, an engineer puts his own life in visible jeopardy to inject a high-intensity counter-potential (associated with the first law) into the objective function, and is ultimately able to resolve the conflict without loss of life.
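A rough sketch of such an equilibrium follows. The functional forms are assumptions made purely for illustration: the unfulfilled order is penalized in proportion to the distance `r` from the source of the conflict, self-preservation is penalized as `w_self / r`, and the first law is a steep penalty on the distance to an endangered human located at a hypothetical radius `r_human`. None of these forms come from the story.

```python
import math


def equilibrium_radius(w_order: float, w_self: float) -> float:
    """Closed-form minimizer of f(r) = w_order * r + w_self / r for r > 0."""
    return math.sqrt(w_self / w_order)


def total_violation(r: float, w_order: float, w_self: float,
                    w_human: float = 0.0, r_human: float = 0.0) -> float:
    """Weighted cumulative violation of the three (softened) laws at radius r."""
    return w_order * r + w_self / r + w_human * abs(r - r_human)


def best_radius(w_order: float, w_self: float,
                w_human: float = 0.0, r_human: float = 0.0) -> float:
    """Grid search over r in (0, 20]; crude, but enough for a sketch."""
    grid = [i * 0.01 for i in range(1, 2001)]
    return min(grid, key=lambda r: total_violation(
        r, w_order, w_self, w_human, r_human))


# Second law vs. third law: the robot settles on a circle of fixed radius.
stuck = equilibrium_radius(w_order=1.0, w_self=4.0)

# Perturbing the potentials only moves the circle; it does not remove it.
still_stuck = equilibrium_radius(w_order=2.0, w_self=4.0)

# A dominant first-law term pulls the optimum to the endangered human.
rescued = best_radius(w_order=1.0, w_self=4.0, w_human=50.0, r_human=12.0)
```

In this toy model, changing `w_order` or `w_self` always yields another positive radius, whereas a sufficiently large `w_human` makes the location of the human the minimizer regardless of the other two weights.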

This story reminds us of the problems associated with careless softening of 'hard' constraints that attenuates or randomizes the sensitivity of the model's response to priorities and scarce resources ("noisy duals"). The user is left with the unpleasant task of figuring out how to tweak the various 'potential' coefficients for a given problem instance in order to achieve the best results. In practice, an artful mixture of hard and soft constraints usually results in a more manageable and usefully responsive system.
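One way to picture such a mixture is to keep the top-priority rule as a hard filter and rank the surviving options by weighted soft violations. The sketch below is hypothetical throughout: the plan fields, the weights, and the `decide` helper are invented for illustration only.

```python
from typing import Callable, Dict, List, Optional, Tuple

Plan = Dict[str, object]  # hypothetical candidate plan


def decide(plans: List[Plan],
           hard_rules: List[Callable[[Plan], bool]],
           soft_terms: List[Tuple[float, Callable[[Plan], float]]]
           ) -> Optional[Plan]:
    """Filter by hard rules, then minimize the weighted soft violations."""
    feasible = [p for p in plans if all(rule(p) for rule in hard_rules)]
    if not feasible:
        return None
    return min(feasible,
               key=lambda p: sum(w * v(p) for w, v in soft_terms))


plans = [
    {"name": "fetch_now", "harms_human": False,
     "order_unmet": 0.0, "self_risk": 0.9},
    {"name": "wait", "harms_human": True,
     "order_unmet": 1.0, "self_risk": 0.0},
    {"name": "fetch_slowly", "harms_human": False,
     "order_unmet": 0.2, "self_risk": 0.3},
]

# First law stays hard; second and third laws are softened with weights.
hard_rules = [lambda p: not p["harms_human"]]
soft_terms = [
    (10.0, lambda p: p["order_unmet"]),  # second law: obey the order
    (5.0, lambda p: p["self_risk"]),     # third law: protect itself
]

best = decide(plans, hard_rules, soft_terms)
print(best["name"])  # fetch_slowly
```

Only the two soft weights remain to be tuned here, and no choice of them can ever trade away the hard rule.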

Side-effects of Violation

Users often turn a few knobs in a DSS, look at the results, and wonder "why did this weird thing happen?". This leads to discussions that often unlock hidden benefits for the customer. In an Asimov story, a robot determines that it is optimal for it to take over the management of delicate instrumentation that is vital to human survival on a planet. It deliberately disobeys humans and gangs up with other robots to permanently banish humans from the control room. The engineers are initially exasperated by this behavior ("is it a bug or a modeling issue?") before a data-driven realization of the life-saving benefit of 'violating' the lower-priority rule calms them. In practice, it is useful to identify unnecessary legacy constraints, if any, that are employed.

Conflict resolution is not just about figuring out what went wrong in the model, which is a relatively easy task given the excellent solver tools available on the market today. It also involves usefully and legibly mapping the change in the model's response back to real-world causal factors. The more interlinked the decision variables and business rules in the system, the more difficult this latter task can become.

In memory of the late Tamil writer Sujatha.
